Create hashes (checksums) for the content of JPEG files and other image types to identify duplicate images and image changes.

I've used MD5s for some time now to keep an eye on duplicate files and on changes in files that I don't want to change. But when it comes to images, the standard routine of just running an MD5 over the whole file doesn't cut it. The problem with image files (and many other types of files) is that the file can change without the image content actually changing. This might happen, for example, if you add metadata to the file, or if the image is opened and resaved without any editing but with a change to the layout and structure of the file. While these are indeed changes to the file content, and they do invalidate a complete file hash, that is not always desirable: what I am concerned about is image content. So what I wanted was a more fine-grained way of identifying whether an image file had changed and, if so, whether the actual image content had changed or just some other meta/extra data in the image file. ImageImprint generates up to four image hashes (2 for any image file, 4 for JPEG files) that provide more insight into whether an image file has changed and the nature of the changes.

The four types of image hash ImageImprint can produce are as follows:

- Full File Hash: The same as any other hashing program, runs the hash over the entire file. It can be used to identify identical files and any changes in the file.

- Generic Image Hash: Renders the image to a standard format and then hashes the result. It can be used to identify identical images (in some instances even when the file format and encoding scheme vary, provided the encoding is non-lossy) and changes to an image.

- Minimal Jpeg Hash: Only for JPEG images. Reduces the JPEG to its most minimal form in a standard format and hashes the result. It can identify identical images that were derived from the same base encode and identify changes to a JPEG's raw image data.

- SOS Jpeg Hash: Only for JPEG images. This is really the kernel of a JPEG file: it is basically the raw image data, but it doesn't contain enough information to reconstruct the full image (the coding key information is missing). You can think of this like the image information with the colour space missing, or a compression algorithm with the key lookup table removed; I guess it is somewhere between those in reality. This really automates the second method in this process. It can be used in much the same way as the above hash, but it does not guarantee complete JPEG integrity, and therefore can be used in some instances where a JPEG is more significantly reorganised (say optimised) without the actual image changing.
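As a point of reference for the simplest of the four, here is a minimal Python sketch of a full file hash. It is not ImageImprint's code (the program itself is a .NET application); the function name `full_file_hash` and the upper-case output are my own choices, with the upper case simply matching the hashes shown later on this page.

```python
import hashlib


def full_file_hash(path, algorithm="md5", chunk_size=1 << 20):
    """Hash every byte of the file, metadata and all.

    `algorithm` can be any name hashlib accepts, e.g. "md5" or "sha1".
    """
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        # Read in chunks so large image files are not loaded into RAM at once.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest().upper()
```

Because every byte contributes to the result, adding a comment or metadata block to an image changes this hash even though the picture is untouched, which is exactly the limitation the other three hashes work around.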

Hopefully I'll end up getting this on a wiki so that users can update it to be more useful but for now if you have any comments or suggestions, just leave them as posts.

Release Version

Click here to download the latest version of ImageImprint (V 0.9.0.0).

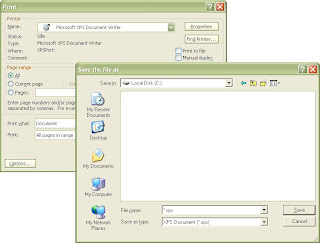

Screen Shots

Usage

This is the same as "-h" but a little more detailed.

To run the program using these options you either need to create a shortcut to the application and then add the command line options to the target line:

or run the program from the command prompt and append the command line options:

Usage: YarragoImageImprint_V_0_9_0_0.exe [options]

-l: Displays the program's licence.

-file: The file that ImageImprint will produce hashes for. ImageImprint only runs in file mode or directory mode; if both are specified, file mode takes precedence.

-d {directory}: The directory that ImageImprint will produce hashes for.

-s {log}: Save the hash data to a file instead of displaying it. The status is displayed instead.

Status: Start - Current - Files Processed - Second Per Files - Instantaneous Second Per Files (the number of seconds it took to process the last file).

-nr: The default operation of the program is to recursively process all sub directories in the base directory. This option stops the behavior so that only files directly in the base directory are processed.

-fs: Write out file size.

-f: Generate a full file hash.

-j: JPEG files only. Generate a minimal JPEG file hash.

-jffo: Only use the first frame in the JPEG image (only affects -j and -sos, does not affect -i). Some JPEGs contain multiple images; if this option is used, only the first frame will be used for the JPEG hashes and subsequent images are ignored.

-sos: JPEG files only. Generate a hash based only on the SOS segment(s).

-w {directory}: Write out the hash data files for the minimal JPEG files and/or SOS segments to this directory (implies -j if it is not used).

-i: Generate the generic image hash (for any known image type).

-nv: Do not verify images when using the generic image method (this speeds up processing time considerably).

-debug: Run the debug test (takes time and does not produce a hash, but checks the JPEG hashes produced are sane).

-hash {algorithm}: Change the algorithm used to produce the hash (md5, sha1). Default if not specified is MD5.

-v {version}: The JPEG imprint version to use. Not supported in the current version but will be available in future versions if the hash algorithms change so you can verify against old hashes you produced. This is for legacy usage only and you should always generate new hashes with the most recent version of the algorithm.

-qv: Gets the program versions.

-np: Do not prompt to enter a key on exit.

Here is a sample command line I would typically use:

YarragoImageImprint_V_0_9_0_0.exe -f -j -sos -i -d "C:\pathtoimagefiles\ " -s "C:\ImageImprintLog.csv"

Here is a sample command line I would use if I was debugging or wanted to see what was going on:

YarragoImageImprint_V_0_9_0_0.exe -f -j -sos -i -d "C:\pathtoimagefiles\ " -s "C:\ImageImprintDebugLog.csv" -w "C:\writefileshere\ " -debug

Sample Usage

There are a number of tasks that ImageImprint will help you do:

- Identify identical duplicate images.

- Ensure that images aren't becoming corrupted over time either through disk corruption or human error.

- Identify if a program is capable of performing an operation losslessly. (Really just a permutation of the above).

- Produce minimal JPEG files (without metadata). This serves a couple of purposes:

  - It can let you produce minimal files with identical images for further use or distribution (e.g. if you want to produce images for distribution that don't have any metadata).

  - It can let you debug my program for me: you can see what is being produced and determine whether there is a problem with it.

What I typically do is generate the hashes for a collection of files and have the program save them to a .csv file using a command like:

YarragoImageImprint_V_0_9_0_0.exe -f -j -sos -i -d "C:\pathtoimagefiles\ " -s "C:\ImageImprintLog.csv"

To find duplicates I then open the result in spreadsheet software and sort each type of hash (one type at a time) and use a formula to match identical hashes.
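If you would rather script the duplicate search than sort in a spreadsheet, something like the following Python sketch would do the same grouping. The column names are an assumption borrowed from the sample table further down this page; the real log layout may differ, so adjust them to whatever header your .csv actually has. The rows here are typed in by hand from that table rather than read from a real log.

```python
import csv
import io
from collections import defaultdict


def find_duplicates(rows, hash_column, name_column="File Name"):
    """Group file names by the value in one hash column.

    `rows` is an iterable of dicts (e.g. from csv.DictReader). Only hashes
    shared by two or more files are returned; empty cells are skipped.
    """
    groups = defaultdict(list)
    for row in rows:
        value = (row.get(hash_column) or "").strip()
        if value:
            groups[value].append(row[name_column])
    return {value: names for value, names in groups.items() if len(names) > 1}


# Hand-typed sample using the shortened hashes from the table on this page.
sample_log = """File Name,Full File,JPEG,SOS,Generic
Lenna_Standard.jpg,587...0C9,B1B...376,181...C61,081...FC5
Lenna_Standard_Comment.jpg,82A...320,B1B...376,181...C61,081...FC5
Lenna_Progressive.jpg,E11...2E1,350...C99,AE3...8D7,081...FC5
Lenna.tiff,727...27E,,,2DB...041
"""
rows = list(csv.DictReader(io.StringIO(sample_log)))

for column in ("Full File", "JPEG", "Generic"):
    print(column, find_duplicates(rows, column))
```

Running it one hash type at a time mirrors the spreadsheet approach: no two files share a full file hash, two share a JPEG hash, and three share a generic image hash.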

To find changes I use some method (spreadsheet or BeyondCompare) to compare an old log file with a current log file to find differences.
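The old-log versus new-log comparison can also be sketched in a few lines of Python. This assumes each log has already been loaded into a dict keyed by file name, with one entry per hash type; the names `compare_logs`, `"full"` and `"jpeg"` are hypothetical and not anything ImageImprint defines.

```python
def compare_logs(old, new):
    """Compare two {file name: {hash type: hash}} mappings.

    Returns which files were added, which were removed, and, for files in
    both logs, which hash types no longer match.
    """
    changed = {}
    for name in old.keys() & new.keys():
        differing = sorted(
            kind for kind in old[name] if old[name][kind] != new[name].get(kind)
        )
        if differing:
            changed[name] = differing
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": changed,
    }


old_log = {
    "a.jpg": {"full": "587...0C9", "jpeg": "B1B...376"},
    "b.jpg": {"full": "E11...2E1", "jpeg": "350...C99"},
}
new_log = {
    "a.jpg": {"full": "82A...320", "jpeg": "B1B...376"},  # only the file hash moved
    "c.jpg": {"full": "85E...194", "jpeg": "729...99A"},
}
print(compare_logs(old_log, new_log))
```

A file whose full file hash changed while its JPEG hash stayed put, like `a.jpg` here, is the case this whole program exists to spot.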

If you have a single photo you are interested in you should just be able to perform the comparison by hand.

I have produced a set of sample images and hashes to better explain what the different hashes mean in a real world context.

First download the sample images from here.

Lenna is the standard test image, so I won't depart from it here.

Here is a table showing each type of hash for a number of images. These are MD5s which I have shortened to just the first 3 and last 3 hex characters, so 137BC77A0D150F3C6149755C968A38DE becomes 137...8DE.

| File Name | Full File | JPEG | SOS | Generic |
| --- | --- | --- | --- | --- |
| Image_Sample.bmp | 137...8DE | | | 225...169 |
| Image_Sample_RGB_Data.dat | 225...169 | | | |
| Lenna.bmp | 303...ACC | | | 2DB...041 |
| Lenna.tiff | 727...27E | | | 2DB...041 |
| Lenna_Jpeg_Standard.bmp | 06A...BF3 | | | 081...FC5 |
| Lenna_Minimal.jpg | B1B...376 | B1B...376 | 181...C61 | 081...FC5 |
| Lenna_Minimal_SOS_Segment_Only.sos | 181...C61 | | | |
| Lenna_Progressive.jpg | E11...2E1 | 350...C99 | AE3...8D7 | 081...FC5 |
| Lenna_Standard.jpg | 587...0C9 | B1B...376 | 181...C61 | 081...FC5 |
| Lenna_Standard_Comment.jpg | 82A...320 | B1B...376 | 181...C61 | 081...FC5 |
| Lenna_Standard_Meta_1.jpg | 9B9...1F6 | B1B...376 | 181...C61 | 081...FC5 |
| Lenna_Standard_Meta_2.jpg | 108...790 | B1B...376 | 181...C61 | 081...FC5 |
| Lenna_Standard_Restart.jpg | 85E...194 | 729...99A | 33E...D76 | 081...FC5 |
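The shortened form used in the table is just the first three and last three characters of the hex string. A one-line Python helper (my own, purely for illustration) reproduces it:

```python
def shorten_hash(value, keep=3):
    """Abbreviate a hex digest to its first and last `keep` characters."""
    return f"{value[:keep]}...{value[-keep:]}"


print(shorten_hash("137BC77A0D150F3C6149755C968A38DE"))  # prints 137...8DE
```

Six hex characters are plenty for reading a table by eye, but comparisons on a real collection should always use the full hash.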

Lenna.tiff: The original image file.

Lenna.bmp: A resave from the original image. The file hash differs from the original because the file format is different, but the generic image hash stays the same because BMP is lossless and so the image is identical to the original.

Lenna_Standard.jpg: A resave from the original. Again the file hash differs because it is a different file. The image hash also differs from the original because JPEG compression is lossy and so the actual image is different. We also get a JPEG and SOS hash because it is a JPEG.

Lenna_Jpeg_Standard.bmp: A resave from Lenna_Standard.jpg. The generic image hash stays the same as Lenna_Standard.jpg because BMP is lossless.

Lenna_Minimal.jpg: This is the same as Lenna_Standard.jpg but is the minimal JPEG (as written out by ImageImprint), so the JPEG and SOS hashes are the same for both files. The file hash and JPEG hash for this file are also the same, because the image is already in its minimal form and so processing it produces the same file again.

Lenna_Minimal_SOS_Segment_Only.sos: This is a file that just contains the SOS segment from the Lenna_Standard.jpg image. You can see that the file hash matches the SOS hash from all images derived from Lenna_Standard.jpg.

Lenna_Progressive.jpg: A resave of the original image as a progressive JPEG. The JPEG hash differs because the raw JPEG data is totally different, but because GIMP can save identical progressive and standard JPEG images the generic image hashes match.

Lenna_Standard_Comment.jpg: A resave of Lenna_Standard.jpg with a comment added to the file. The file hash changes because the file has varied, but the JPEG, SOS and generic image hashes match because the JPEG image data hasn't been altered.

Lenna_Standard_Meta_1.jpg: Much the same as above.

Lenna_Standard_Meta_2.jpg: Much the same as above.

Lenna_Standard_Restart.jpg: Much the same as progressive, except that it is a standard JPEG image with restart markers.

Image_Sample.bmp: This is a test file I created to demonstrate how the generic image hash works. It is a normal 24bit BMP test image.

Image_Sample_RGB_Data.dat: This is the raw data that is generated by the generic image hash function, hence the file hash is the same as the generic image hash for Image_Sample.bmp.

Analysis

The following is a description of what the program does and a bit of background on how it works.

Generic Image

Raw pixel data, BGR, 8 bits per channel (3 bytes per pixel). Why BGR? Because System.Drawing.Imaging.PixelFormat.Format24bppRgb is actually stored as BGR (which I expect is because of the endianness of the machine it is running on), and I didn't really want to add the overhead of reordering; it really doesn't matter so long as it is standard. The data starts from the top left pixel and works across the top row in order, then the second row and so on, until the far right pixel in the bottom row is reached.

Here is the data that is produced to hash (the linebreaks and spaces are formatting niceties only).

0000FF 0000FF 0000FF 0000FF 0000FF

00FF00 00FF00 00FF00 00FF00 00FF00

FF0000 FF0000 FF0000 FF0000 FF0000

0000FF 00FF00 FF0000 0000FF 00FF00

FF0000 0000FF 00FF00 FF0000 0000FF

00FF00 FF0000 0000FF 00FF00 FF0000

0000FF 0000FF 0000FF 0000FF 0000FF

FF0000 FF0000 0000FF 00FF00 00FF00

00FF00 00FF00 0000FF FF0000 FF0000

0000FF 0000FF 0000FF 0000FF 0000FF

If you want to see how it actually works you can pump it through an online hex to MD5 converter (such as the one here) to see that the hash produced is the same as in the table above.
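The same idea can be sketched offline in Python. This is only an illustration of the byte layout described above (BGR, three bytes per pixel, rows top to bottom, no padding between rows); the function names are mine, and the real program does its rendering through .NET rather than anything like this.

```python
import hashlib


def raw_bgr_bytes(rows):
    """Flatten rows of (r, g, b) pixels into B, G, R bytes, top row first."""
    out = bytearray()
    for row in rows:
        for r, g, b in row:
            out += bytes((b, g, r))
    return bytes(out)


def generic_image_hash(rows, algorithm="md5"):
    """Hash the flattened pixel data with the chosen algorithm."""
    return hashlib.new(algorithm, raw_bgr_bytes(rows)).hexdigest().upper()


RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)

# The first three rows of the listing above: a red, a green and a blue row.
top_rows = [[RED] * 5, [GREEN] * 5, [BLUE] * 5]
print(raw_bgr_bytes(top_rows).hex().upper())
print(generic_image_hash(top_rows))
```

Note that a red pixel comes out as 0000FF, not FF0000, which is the BGR ordering at work and matches the first row of the listing.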

For those of you that better understand the JPEG standard you may be interested to know in more detail exactly how the JPEG hashes work. For those of you who are interested but don't know how JPEG files work, I suggest you take a look here, here or here if you are really keen (Appendix B), and then come back when you have the basics down pat.

When generating a hash for a JPEG, a minimal JPEG file is generated (you can write it out and examine the contents using the "-w" command line switch). The minimal JPEG only uses the segments listed below from the original JPEG and discards all other segments. The segments are then sorted into a standard order and multiple segments of the same type are joined into a single segment. In this way JPEG files that have the same content but differing fragmentation can be converted to a uniform structure, leading to identical output images and therefore identical image hashes.

Jpeg Standard Format

SOI

[Tables/Misc]

SOF

[Tables/Misc]

SOS[...More SOS's...]

EOI

Tables/Misc:

DQT

DHT

DRI

All tables are joined into a single segment. Tables are ordered by decoder id, then by precision (8 bit then 16 bit for DQTs) or table type (AC then DC for DHTs). If the id byte (decoder id + other info) is identical, then the tables are left in the order they appear in the image; in practice I don't think this should happen.
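To make the first step of this concrete, here is a rough Python sketch that walks the segments of a JPEG, keeps only the segment types listed above, and hashes the SOS data. It is not ImageImprint's implementation: it does not sort or join tables, it ignores fill bytes and other edge cases, and exactly which bytes the real program feeds into the SOS hash is an assumption on my part (here, each SOS header plus its entropy-coded data, without the marker or length bytes). The `seg` helper and the tiny hand-built byte strings exist only so the example can run without a real image.

```python
import hashlib
import struct

SOI, EOI, SOS, DQT, DHT, DRI = 0xD8, 0xD9, 0xDA, 0xDB, 0xC4, 0xDD
# SOF0..SOF15, skipping 0xC4 (DHT), 0xC8 (reserved) and 0xCC (DAC).
SOF = {0xC0, 0xC1, 0xC2, 0xC3, 0xC5, 0xC6, 0xC7, 0xC9, 0xCA, 0xCB, 0xCD, 0xCE, 0xCF}
KEEP = SOF | {DQT, DHT, DRI, SOS}


def iter_segments(data):
    """Yield (marker, body) for each segment between SOI and EOI."""
    if data[:2] != bytes([0xFF, SOI]):
        raise ValueError("not a JPEG: missing SOI")
    pos = 2
    while pos + 1 < len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        pos += 2
        if marker == EOI:
            return
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", data[pos:pos + 2])
        body = data[pos + 2:pos + length]
        pos += length
        if marker == SOS:
            # Entropy-coded data runs until the next real marker: 0xFF00 is a
            # stuffed byte and 0xFFD0..0xFFD7 are restart markers in the scan.
            end = pos
            while end + 1 < len(data):
                nxt = data[end + 1]
                if data[end] == 0xFF and nxt != 0x00 and not 0xD0 <= nxt <= 0xD7:
                    break
                end += 1
            else:
                end = len(data)
            body += data[pos:end]
            pos = end
        yield marker, body


def minimal_segments(data):
    """Drop everything (APPn, COM, ...) that is not in the kept set."""
    return [(m, b) for m, b in iter_segments(data) if m in KEEP]


def sos_hash(data):
    """MD5 over the SOS segment bodies only (assumed byte selection)."""
    digest = hashlib.md5()
    for marker, body in iter_segments(data):
        if marker == SOS:
            digest.update(body)
    return digest.hexdigest().upper()


def seg(marker, payload):
    """Build one segment: marker, length, payload (test helper only)."""
    return bytes([0xFF, marker]) + struct.pack(">H", len(payload) + 2) + payload


# Two synthetic "files" with the same image segments; one has extra metadata.
core = (seg(DQT, bytes(65)) + seg(0xC0, bytes(9)) + seg(DHT, bytes(17))
        + seg(SOS, bytes(6)) + b"\x12\xff\x00\x34" + bytes([0xFF, EOI]))
plain = bytes([0xFF, SOI]) + core
tagged = bytes([0xFF, SOI]) + seg(0xE0, b"JFIF\x00") + seg(0xFE, b"hello") + core

print(minimal_segments(plain) == minimal_segments(tagged))
print(sos_hash(plain) == sos_hash(tagged))
```

Both comparisons come out true even though the two byte strings differ, which is the behaviour the table shows for Lenna_Standard.jpg and Lenna_Standard_Comment.jpg.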

The following are some information and caveats that I have identified with each hash.

- Full File Hash: File hash changes even when the actual image content stays the same.

- Generic Image Hash: Images that contain higher than 24bit fidelity may be incorrectly paired with images using 24bit or above (because the image is effectively flattened into 24 bits). I have not tested images with an alpha channel. There is no guard against image skewing (i.e. you could combine the entire image onto a single row, or any combination of rows, and so long as the pixel order is the same you would generate the same hash), but in practice I don't think this will ever happen. Some image types can contain multiple images in the file; this hash is only ever run over the primary image in the file (subsequent images are ignored). This type of hash takes the longest because it uses the standard .NET image conversion function, which somehow verifies the whole image, and this process seems to take a while (and for this reason this type of hash is probably more robust and less error prone). It also uses considerably more resources (RAM) because the full image must be decompressed to this raw format, and for even reasonably sized JPEGs this can mean a whole bucket load of raw data (yes, this could be done slightly more efficiently).

- Minimal Jpeg Hash: There are a number of scenarios that could lead to minimal JPEGs that are different even though the image is truly identical, and this is why you have the other hashes to provide some fallback against this. It will not identify more complex changes than simple reorganisation and fragmentation of the file (GIMP can produce identical progressive vs standard images, which leads to grossly different underlying data but identical images).

- SOS Jpeg Hash: The image could become corrupt (conceivably the DHT or DQT tables could become corrupt) without altering the hash.
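The skewing caveat on the generic image hash is easy to demonstrate with a small Python sketch. Here a 4x1 image and a 2x2 image with the same pixels in the same order hash identically when only the flattened pixel data is hashed. The second function, which mixes the width and height into the hash, is one possible guard; it is my own suggestion and not something ImageImprint does.

```python
import hashlib
import struct


def pixel_hash(rows):
    """MD5 over the flattened BGR pixel data only."""
    data = bytes(c for row in rows for (r, g, b) in row for c in (b, g, r))
    return hashlib.md5(data).hexdigest().upper()


def pixel_hash_with_size(rows):
    """Hypothetical guard: prefix the data with width and height."""
    width, height = len(rows[0]), len(rows)
    data = bytes(c for row in rows for (r, g, b) in row for c in (b, g, r))
    return hashlib.md5(struct.pack(">II", width, height) + data).hexdigest().upper()


pixels = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (9, 9, 9)]
wide = [pixels]                      # 4 pixels on a single row
square = [pixels[:2], pixels[2:]]    # the same pixels as 2 rows of 2

print(pixel_hash(wide) == pixel_hash(square))
print(pixel_hash_with_size(wide) == pixel_hash_with_size(square))
```

The first comparison is true and the second is false, so the guard works, but adding it would change every existing generic image hash.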

Obviously the full file hash will be stable over time. I also imagine that both the JPEG hashes will be stable, at least up to a point, with bug fixes being included in later versions and the version command line option providing backward compatibility for comparison against existing hash logs. However, I envisage that the generic image hash will not necessarily be stable because of the way it is implemented (I'd really like to get it to RGB one day, it just seems nicer), so don't rely on it alone for long term archive verification (well, at least not yet).

The JPEG methods perform some file type validation (they make sure that the structure seems fine and that the critical segments are there, but they don't check that the data in the segments is sane). For this reason, if you want to be sure that your image files are sane to begin with, you are better off using a different program or running the generic image hash, which makes use of Microsoft's image validation, although I'm not sure how this works or what its tolerance is like.

As a result of the above it is best to use a combination (a couple) of the above for most tasks, hence giving you further insight on how the file and/or image has changed.
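One way to read a combination of hashes is to check them from strictest to loosest. The Python sketch below is my own reading of the sample table and descriptions on this page, not logic taken from the program; the dictionary keys (`full`, `jpeg`, `sos`, `generic`) and the wording of the results are hypothetical.

```python
def describe_change(old, new):
    """Compare two hash sets for the same image, strictest hash first.

    Each argument maps "full", "jpeg", "sos" and "generic" to a hash string;
    "jpeg" and "sos" are None for non-JPEG files.
    """
    if old["full"] == new["full"]:
        return "file unchanged"
    if old.get("jpeg") and old["jpeg"] == new.get("jpeg"):
        return "only non-image data changed (e.g. metadata or comments)"
    if old.get("sos") and old["sos"] == new.get("sos"):
        return "scan data unchanged, but tables or structure differ"
    if old["generic"] == new["generic"]:
        return "encoded differently, but decoded pixels are identical"
    return "image content changed"


# Shortened hashes copied from the sample table above.
standard = {"full": "587...0C9", "jpeg": "B1B...376", "sos": "181...C61", "generic": "081...FC5"}
comment = {"full": "82A...320", "jpeg": "B1B...376", "sos": "181...C61", "generic": "081...FC5"}
progressive = {"full": "E11...2E1", "jpeg": "350...C99", "sos": "AE3...8D7", "generic": "081...FC5"}
original = {"full": "727...27E", "jpeg": None, "sos": None, "generic": "2DB...041"}

print(describe_change(standard, comment))
print(describe_change(standard, progressive))
print(describe_change(original, standard))
```

Bear in mind the caveats listed above still apply: a matching SOS hash on its own does not prove the tables are intact, so treat the third result as a prompt to look closer rather than a clean bill of health.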

Testing And Debugging

This program was really only designed for my personal use; I didn't intend releasing it. I've only released it because I thought it might be useful to someone else. As a result the error handling is not as robust as it could have been, because I figured that if there was a problem I would identify it when it occurred, and that I would treat the program well and only give it valid data. So go easy on it and be careful: it isn't the most robust code I've ever written, but it does work well if you give it valid data (i.e. directories that actually exist). If you have a genuine repeatable bug you can produce with reasonable input, let me know and I'll see what I can do to fix it. I'd say it's somewhere between Alpha and Beta level code. I have successfully run the program over my photo collection, which has in excess of 50,000 JPEGs, and am fairly confident in the output. Running it over this collection from a network drive with the "-debug" option (which takes significantly longer) took around 2 full days.

Here is a list of bugs that I'm aware of but haven't got around to fixing yet:

- When files are written out they are only valid if a valid hash is generated. I have not actually experienced this bug, but I expect that this is what happens if the input file is not valid.

So this is where you can come in. The program works well, and if you treat it well you can get a lot out of it, but if you do find an error let me know and I will try to fix it. I provide the software and you provide the testing: win-win. The most untested component is the command line interface, because up until just about the release version I just modified the parameters in code (it meant I didn't have to spend time on something that I didn't need when I was using it myself).

Debug checks you can run for me:

- Run the debug check for all your files and let me know if there are any failures.

- There were a few other errors I was going to get you to check for me but I can't think of them right now? Oops.

The checks that the debug option performs are as follows:

- The checks only apply to the custom JPEG processing I have written.

- Check that the minimal JPEG that is produced matches (image wise) the original image.

- Check that rehashing the minimal JPEG produces the same result.

Plugins

I see a few plugins in the future of this program so that users can generate these hashes as they download their images from their camera or card as part of their workflow. If you are from a company that produces this software you have two options: either write the plugin yourself, making use of the command line interface of the program, or give me a free version to work with and, if I find time, I will write the plugin for you.

Interested in JPEGs in detail or Photo Management

In the course of developing my program I was doing a bit of in depth reading on how JPEG files work and I came across the following site:

http://www.impulseadventure.com/photo

I think it's really after my own heart on a lot of digital photography management and it's really worth a look.